Mastering Boolean Algebra: The Foundation of Digital Circuit Design

Unlock the secrets of digital design by mastering Boolean algebra. This guide explains the core logic gates and laws, with practical examples and simulations on digisim.io to build your foundational engineering skills.

In the mid-19th century, George Boole forged a new kind of mathematics. It was an algebra not of numbers, but of ideas—a formal system for manipulating the concepts of TRUE and FALSE. At the time, it was a profound but abstract piece of intellectual architecture. Today, that architecture is the bedrock of our digital world. Every microprocessor, every memory chip, and every pixel on your screen operates according to the simple, powerful rules of Boolean algebra.

For the aspiring engineer or computer scientist, mastering these rules is not just an academic exercise. It is the single most crucial skill for designing efficient, elegant, and optimized digital circuits. Understanding this logical language allows you to see through the complexity of a circuit diagram and manipulate its very essence. If you can simplify the math, you can simplify the silicon.

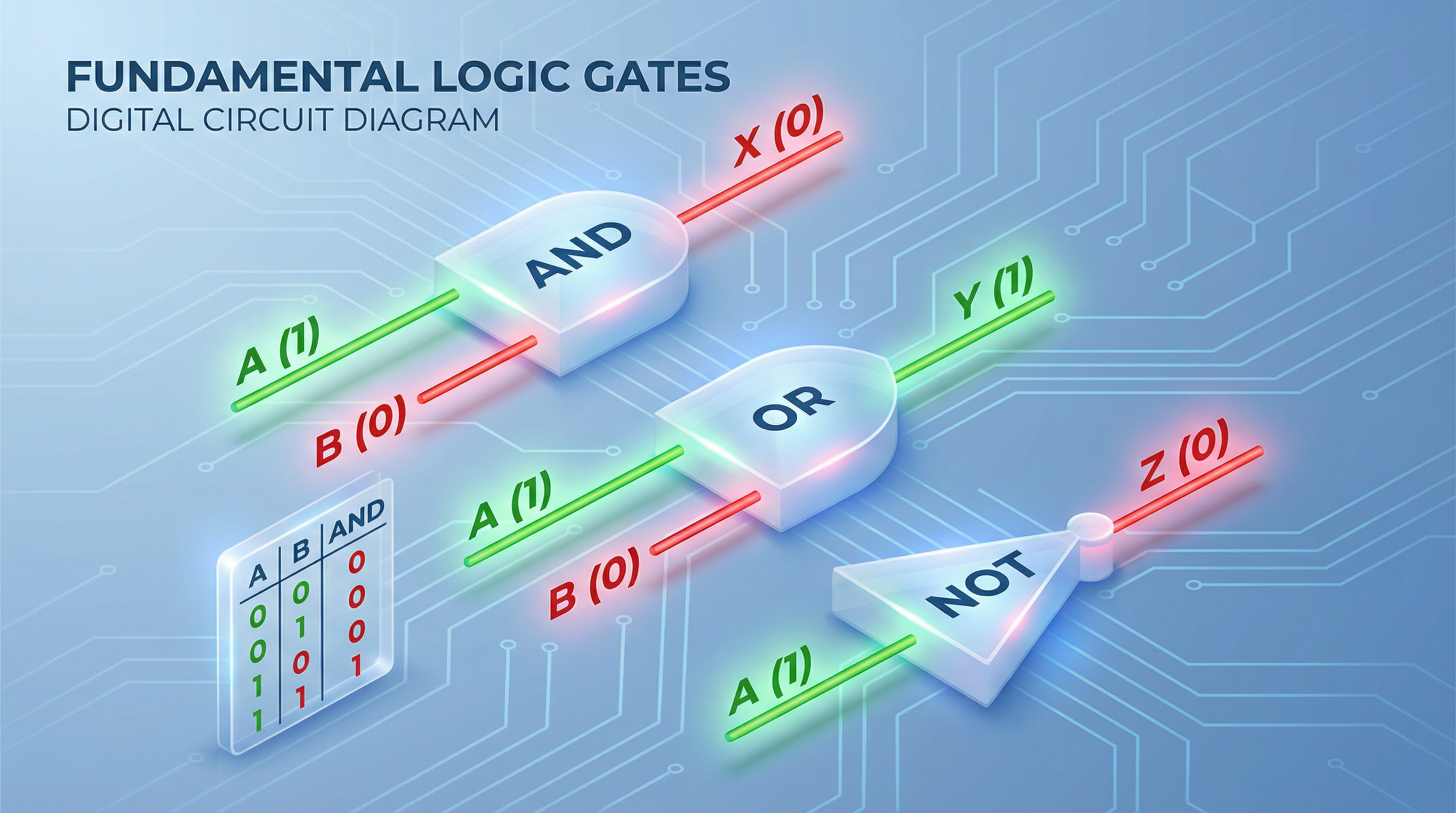

The Trinity of Logic: AND, OR, and NOT

All of digital logic, no matter how complex, is built from three primitive operations. To understand them is to understand the alphabet of digital design. In digisim.io, these are represented by discrete components that you can wire together to form complex "sentences" of logic.

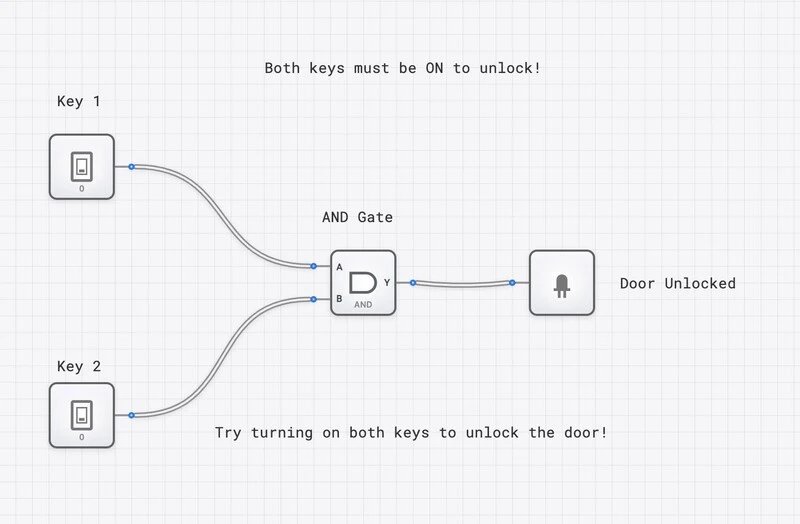

1. The AND Operation

The output is TRUE (1) only if all inputs are TRUE. Think of it as a strict requirement. If you are building a safety system for a heavy machine, you might want the motor to run only if the "Start Button" is pressed AND the "Safety Guard" is closed.

- Boolean Expression: $Y = A \cdot B$

- Component Name: AND

2. The OR Operation

The output is TRUE (1) if at least one input is TRUE. This is an inclusive condition. In a home security system, the alarm should trigger if the "Front Door Opens" OR the "Back Window Breaks."

- Boolean Expression: $Y = A + B$

- Component Name: OR

3. The NOT Operation

The output is the inverse of the single input. It is a simple inverter. If the input is high, the output is low. It’s the "contrarian" of the digital world.

- Boolean Expression: $Y = \overline{A}$ (or $A'$)

- Component Name: NOT

I chose the Component Circuit link above because when you're first learning these gates, you need a clean, isolated environment to toggle inputs and watch the truth table come to life without the distraction of a larger system.

Technical Specifications: The Truth Tables

Truth tables are the fundamental scorecard of digital logic. They map every possible input combination to its resulting output.

AND Gate Truth Table

| Input A | Input B | Output Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 0 |
| 1 | 0 | 0 |
| 1 | 1 | 1 |

OR Gate Truth Table

| Input A | Input B | Output Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 1 |

NOT Gate Truth Table

| Input A | Output Y |
|---|---|
| 0 | 1 |
| 1 | 0 |
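The three tables above can also be generated rather than memorized. The following is a minimal Python sketch, not tied to digisim.io; the function names `and_gate`, `or_gate`, `not_gate`, and `truth_table` are chosen here for illustration.

```python
from itertools import product

def and_gate(a, b):
    return a & b

def or_gate(a, b):
    return a | b

def not_gate(a):
    return 1 - a

def truth_table(fn, arity):
    """Return one (inputs, output) row for every combination of 0/1 inputs."""
    return [(bits, fn(*bits)) for bits in product((0, 1), repeat=arity)]

for name, fn, arity in (("AND", and_gate, 2), ("OR", or_gate, 2), ("NOT", not_gate, 1)):
    print(name)
    for bits, out in truth_table(fn, arity):
        print("  ", *bits, "->", out)
```

Because `product` enumerates inputs in counting order (00, 01, 10, 11), the printed rows line up with the tables in this section.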

The Foundational Axioms

With our basic operations defined, we can now explore the laws that allow us to manipulate and simplify logical expressions. These are not just abstract rules; they are circuit transformations that you will use daily. In digisim.io, applying these laws often means the difference between a circuit that fits on your screen and one that requires constant scrolling.

The Laws of Identity and Annihilation

These laws define how variables interact with the constants 0 and 1. On the digisim.io platform, you can use the CONSTANT and CONSTANT_ZERO components to verify these.

- Identity Law: A variable combined with the "identity" element for an operation remains unchanged.

  - OR with 0: $A + 0 = A$

  - AND with 1: $A \cdot 1 = A$

- Null (or Annihilation) Law: A variable combined with the "annihilator" element results in that element.

  - OR with 1: $A + 1 = 1$ (If one input to an OR gate is already 1, the output is guaranteed to be 1).

  - AND with 0: $A \cdot 0 = 0$ (If one input to an AND gate is 0, the output is guaranteed to be 0).

The Laws of Complements and Idempotence

These laws govern how a variable interacts with itself or its inverse.

- Idempotent Law: Combining a variable with itself doesn't change its value.

  - $A + A = A$

  - $A \cdot A = A$

- Complement Law: A variable combined with its inverse produces a constant. This is the heart of logical contradiction and tautology.

  - $A + \overline{A} = 1$ (A value is always either TRUE or FALSE, so one of $A$ and $\overline{A}$ is always 1).

  - $A \cdot \overline{A} = 0$ (A value can never be both TRUE and FALSE at the same time).
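The identity, null, idempotent, and complement laws each involve a single variable, so they can be checked exhaustively by trying $A = 0$ and $A = 1$. Here is a small Python sketch of that check; the helper name `check_single_variable_laws` is made up for this example, and `&`, `|`, and `1 - a` stand in for AND, OR, and NOT on single bits.

```python
def check_single_variable_laws():
    """Try every law with A = 0 and A = 1; return the names of any that fail."""
    laws = {
        "identity (OR)":    lambda a: (a | 0) == a,
        "identity (AND)":   lambda a: (a & 1) == a,
        "null (OR)":        lambda a: (a | 1) == 1,
        "null (AND)":       lambda a: (a & 0) == 0,
        "idempotent (OR)":  lambda a: (a | a) == a,
        "idempotent (AND)": lambda a: (a & a) == a,
        "complement (OR)":  lambda a: (a | (1 - a)) == 1,
        "complement (AND)": lambda a: (a & (1 - a)) == 0,
    }
    return [name for name, holds in laws.items()
            if not all(holds(a) for a in (0, 1))]

failures = check_single_variable_laws()
print("all laws hold" if not failures else f"failed: {failures}")
```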

"The Gotcha": When Boolean Logic Breaks From Convention

For anyone coming from traditional algebra, most of these laws feel familiar. Commutative ($A+B = B+A$) and Associative ($(A \cdot B) \cdot C = A \cdot (B \cdot C)$) laws work just as you'd expect. The first Distributive law, $A \cdot (B + C) = A \cdot B + A \cdot C$, is also standard.

But Boolean algebra has a trick up its sleeve—a second distributive law that has no counterpart in the algebra of real numbers.

$$A + (B \cdot C) = (A + B) \cdot (A + C)$$

This is profoundly weird at first glance. In regular math, $5 + (3 \times 4)$ is 17, while $(5+3) \times (5+4)$ is 72. They are not equal. But in the binary world of logic, this duality holds true.

I've seen countless students struggle with this because they try to "FOIL" the right side like a quadratic equation. Don't do that. Instead, think about the logic: if $A$ is 1, both sides are 1. If $A$ is 0, both sides depend entirely on $B \cdot C$. This law is a cornerstone of converting between standard forms of logic expressions (Sum-of-Products to Product-of-Sums) and is essential for advanced optimization.
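If the argument by cases feels too quick, a brute-force check over all eight input combinations settles it. The sketch below is illustrative Python (the function name is an invention for this example), and it repeats the integer counterexample from the text for contrast.

```python
from itertools import product

def second_distributive_holds(a, b, c):
    """Compare A + (B . C) with (A + B) . (A + C) for single-bit inputs."""
    return (a | (b & c)) == ((a | b) & (a | c))

# Check every combination of three bits.
boolean_ok = all(second_distributive_holds(a, b, c)
                 for a, b, c in product((0, 1), repeat=3))

# The same shape in ordinary arithmetic, using the numbers from the text.
integer_lhs = 5 + (3 * 4)
integer_rhs = (5 + 3) * (5 + 4)

print(boolean_ok, integer_lhs, integer_rhs)  # True 17 72
```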

The Power Tools: Absorption and De Morgan's Laws

If the previous laws are the hand tools of logic simplification, these next ones are the power tools. They eliminate entire terms and radically restructure expressions.

The Absorption Law: Eliminating Redundancy

This is one of the most useful—and satisfying—simplification laws. It allows you to delete entire gates from your design without changing the behavior.

- $A + A \cdot B = A$

- $A \cdot (A + B) = A$

Let's intuit the first form: The expression $A + A \cdot B$ is true if $A$ is true, OR if both $A$ and $B$ are true. But wait—if $A$ is already true, the entire expression is true regardless of $B$. The $A \cdot B$ term is completely redundant. In a physical circuit, that's an AND gate you just saved.
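The same result also falls out algebraically from laws introduced earlier. One possible derivation, using only the Identity, Distributive, and Null laws:

$$
\begin{aligned}
A + A \cdot B &= A \cdot 1 + A \cdot B && \text{(Identity: } A = A \cdot 1\text{)} \\
&= A \cdot (1 + B) && \text{(Distributive)} \\
&= A \cdot 1 && \text{(Null: } 1 + B = 1\text{)} \\
&= A && \text{(Identity)}
\end{aligned}
$$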

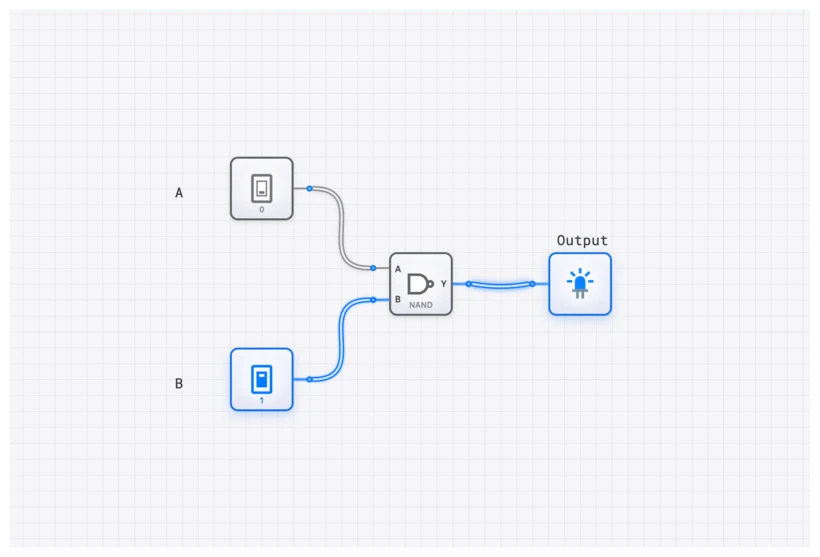

De Morgan's Laws: The Great Inverter

Augustus De Morgan's theorems provide a critical method for simplifying inverted expressions. They show how to distribute a NOT operation across ANDs and ORs.

- $\overline{A \cdot B} = \overline{A} + \overline{B}$ (The inverse of A AND B is NOT A OR NOT B).

- $\overline{A + B} = \overline{A} \cdot \overline{B}$ (The inverse of A OR B is NOT A AND NOT B).

In circuit terms, this means a NAND gate is equivalent to an OR gate with inverted inputs. This duality is fundamental to modern chip design. In CMOS technology, NAND and NOR gates are actually easier and cheaper to build than AND and OR gates, because an AND or OR is typically implemented as a NAND or NOR followed by an inverter.
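Both theorems, and the "NAND is an OR with inverted inputs" reading, can be confirmed with a few lines of Python. This is only a sketch: `nand`, `inv`, and `or_from_nand` are names picked for this example, not simulator components.

```python
from itertools import product

def nand(a, b):
    return 1 - (a & b)

def inv(a):
    return nand(a, a)  # a NAND with both inputs tied together acts as NOT

def or_from_nand(a, b):
    # NAND of the inverted inputs: by De Morgan this equals A + B
    return nand(inv(a), inv(b))

pairs = list(product((0, 1), repeat=2))

# NOT(A . B) == NOT A + NOT B
de_morgan_1 = all(nand(a, b) == ((1 - a) | (1 - b)) for a, b in pairs)
# NOT(A + B) == NOT A . NOT B
de_morgan_2 = all((1 - (a | b)) == ((1 - a) & (1 - b)) for a, b in pairs)
# An OR built purely from NAND gates behaves like a real OR
or_matches = all(or_from_nand(a, b) == (a | b) for a, b in pairs)

print(de_morgan_1, de_morgan_2, or_matches)  # True True True
```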

Simulating on digisim.io: From Theory to Tangible Proof

Reading about these laws is one thing; seeing them work is another. Let's prove the Absorption Law, $A + A \cdot B = A$, on the digisim.io canvas. This is a classic exercise I give my first-year students to build their confidence in the math.

Step-by-Step Verification

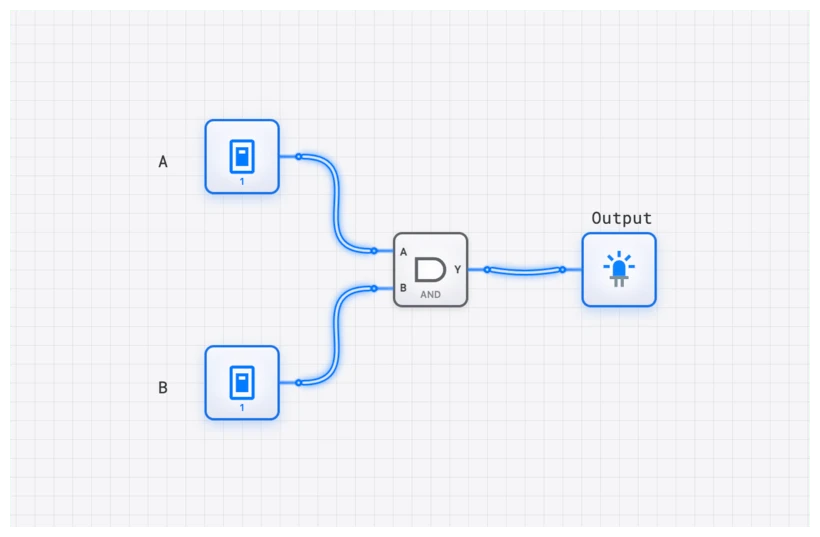

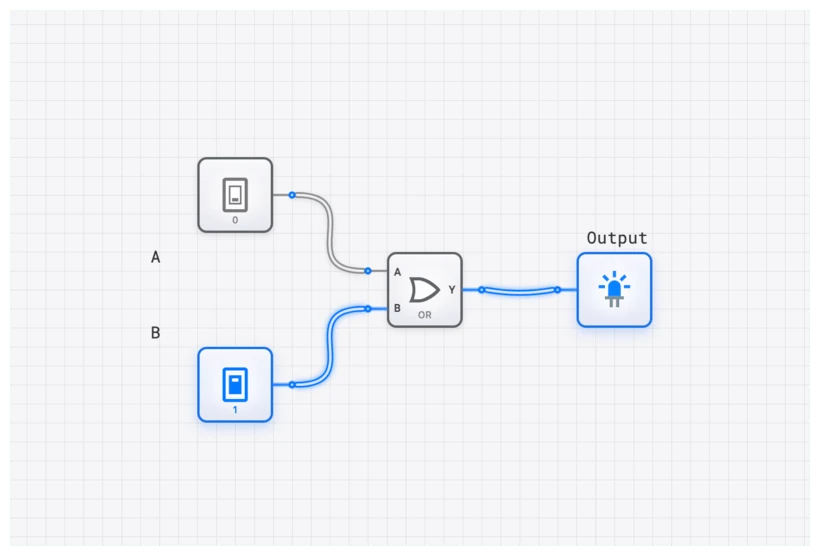

- Open the Simulator: Start a new project at digisim.io/circuits/new.

- Place Inputs: Drag two INPUT_SWITCH components onto the canvas. Label them 'A' and 'B' using the TEXT tool.

- Build the Complex Side:

  - Place an AND gate. Connect 'A' and 'B' to its inputs.

  - Place an OR gate. Connect the output of the AND gate to one input, and connect 'A' directly to the other input.

  - Connect the OR gate's output to an OUTPUT_LIGHT.

- Build the Simplified Side:

  - Simply connect 'A' directly to a second OUTPUT_LIGHT.

- The SimCast Moment: Use the SimCast feature to record your interaction. Toggle 'A' and 'B' through all four combinations. You will notice that the two lights always match. No matter what 'B' does, the first light only cares about 'A'.
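If you want to predict what the two lights will show before wiring anything, the circuit from the steps above can be modeled in a few lines of Python. This is a rough software stand-in for the canvas, not digisim.io's own API; `complex_side` and `simplified_side` are hypothetical names for the two halves of the build.

```python
from itertools import product

def complex_side(a, b):
    and_out = a & b      # AND gate fed by switches A and B
    return a | and_out   # OR gate fed by A and the AND gate's output

def simplified_side(a, b):
    return a             # a wire straight from switch A; B is ignored

# Toggle A and B through all four combinations, as in the last step.
for a, b in product((0, 1), repeat=2):
    print(f"A={a} B={b}  light1={complex_side(a, b)}  light2={simplified_side(a, b)}")

lights_match = all(complex_side(a, b) == simplified_side(a, b)
                   for a, b in product((0, 1), repeat=2))
print("lights always match:", lights_match)
```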

Oscilloscope Verification: Watching the Propagation

To truly understand the "cost" of the unsimplified circuit, place an OSCILLOSCOPE_8CH on the canvas. Connect Channel 1 to Input 'A' and Channel 2 to the output of your complex OR gate.

When you toggle 'A', you'll see a tiny delay in the waveform on the oscilloscope. This is the propagation delay ($t_{pd}$). Even in a simulator, every gate adds a small amount of time for the signal to travel. By simplifying $A + A \cdot B$ down to just $A$, you aren't just saving space; you are removing the delay of two gates (the AND and the OR) from the signal path. In a CPU running at 4 GHz, the clock period is only 0.25 ns, so a few extra gate delays on a critical path can be the difference between a stable system and one that misses its timing.
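To put rough numbers on this, here is a toy timing model in Python. Everything in it is an assumption made for illustration: each gate is given the same made-up delay (`GATE_DELAY_PS`), wires are treated as instantaneous, and the circuits are described by small dictionaries invented for this sketch. Real gate delays depend on the technology and the load.

```python
GATE_DELAY_PS = 100  # hypothetical per-gate delay in picoseconds, illustration only

# Each node maps to the nodes that drive it; primary inputs have no drivers.
complex_circuit = {"A": [], "B": [], "AND": ["A", "B"], "OR": ["A", "AND"]}
simplified_circuit = {"A": []}

def worst_case_delay_ps(circuit, node):
    """Longest input-to-node delay, charging one GATE_DELAY_PS per gate crossed."""
    drivers = circuit[node]
    if not drivers:  # a primary input is available at time 0
        return 0
    return GATE_DELAY_PS + max(worst_case_delay_ps(circuit, d) for d in drivers)

clock_period_ps = 1e12 / 4e9  # one cycle of a 4 GHz clock, in picoseconds

print(worst_case_delay_ps(complex_circuit, "OR"))    # longest path: A -> AND -> OR
print(worst_case_delay_ps(simplified_circuit, "A"))  # a bare wire in this model
print(clock_period_ps)
```

Under these assumed numbers, the unsimplified path costs two gate delays while the simplified one costs none, which is a large share of a 250 ps clock period.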

I've linked the Security Alarm template above because it demonstrates how multiple gates (AND, OR, NOT) work together in a real-world context, providing a perfect playground for applying simplification laws.

Real-World Application: Where Simplification Saves the Day

This isn't just about saving a few gates in a classroom exercise. In real-world systems, simplification directly translates to cheaper, faster, and more power-efficient hardware.

Example 1: High-Speed Memory Address Decoding

In the classic Intel 8086 architecture, the CPU uses address lines to select which memory chip to talk to. The logic that enables a specific chip is called an address decoder. If the decoder logic is unoptimized, it takes longer for the "Enable" signal to reach the RAM chip. This forces the CPU to insert "wait states"—essentially idling because the logic is too slow. By applying Boolean laws to the decoder circuitry, engineers can shave off enough delay to run the entire system at a higher clock speed.
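As a concrete but hypothetical illustration, suppose a RAM chip should be enabled only for the lowest 64 KB of the 8086's 20-bit address space, that is, when address lines A19 through A16 are all 0. The memory map and function names below are assumptions for this sketch, not taken from any real board. The naive form inverts each line and ANDs the results; De Morgan's law collapses that into a single NOR.

```python
def bit(address, n):
    """Return address line n (0 or 1) of an integer address."""
    return (address >> n) & 1

def enable_and_of_inverted(address):
    """Naive decoder: four inverters feeding a 4-input AND."""
    return ((1 - bit(address, 19)) & (1 - bit(address, 18))
            & (1 - bit(address, 17)) & (1 - bit(address, 16)))

def enable_single_nor(address):
    """De Morgan's form: one 4-input NOR of the same address lines."""
    return 1 - (bit(address, 19) | bit(address, 18)
                | bit(address, 17) | bit(address, 16))

# The enable depends only on A19..A16, so one address per 64 KB block covers every case.
forms_agree = all(enable_and_of_inverted(block << 16) == enable_single_nor(block << 16)
                  for block in range(16))

print(forms_agree, enable_single_nor(0x0FFFF), enable_single_nor(0x10000))  # True 1 0
```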

Example 2: The ALU Status Flags

The Arithmetic Logic Unit (ALU) is the computational heart of a CPU. After an operation, it sets status flags in the FLAGS_REGISTER (like Zero, Negative, or Overflow). The logic for the Overflow flag is notoriously tricky, because it depends on both the carry into and the carry out of the most significant bit.

If you were to build this using raw logic without simplification, you'd end up with a massive web of gates. By using De Morgan's laws and the Distributive property, designers can compress this logic into a few high-speed NAND gates, ensuring the flags are ready the instant the calculation finishes.
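One widely used formulation for two's-complement addition is that overflow equals the carry into the most significant bit XOR the carry out of it. The Python sketch below assumes an 8-bit adder (the width and the function names are choices made for this example) and checks that rule against a plain signed-range test for every pair of inputs.

```python
def add8_with_overflow(x, y):
    """Add two 8-bit values; return (8-bit result, overflow flag from the MSB carries)."""
    carry_in = ((x & 0x7F) + (y & 0x7F)) >> 7    # carry into bit 7
    carry_out = ((x & 0xFF) + (y & 0xFF)) >> 8   # carry out of bit 7
    return (x + y) & 0xFF, carry_in ^ carry_out

def to_signed(v):
    """Interpret an 8-bit pattern as a two's-complement integer."""
    return v - 256 if v & 0x80 else v

def overflow_by_range(x, y):
    """Reference check: does the true signed sum fall outside -128..127?"""
    total = to_signed(x) + to_signed(y)
    return int(total < -128 or total > 127)

rule_matches = all(add8_with_overflow(x, y)[1] == overflow_by_range(x, y)
                   for x in range(256) for y in range(256))

print(rule_matches)                    # True
print(add8_with_overflow(0x7F, 0x01))  # (128, 1): 127 + 1 overflows
print(add8_with_overflow(0xFF, 0x01))  # (0, 0): -1 + 1 does not
```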

Related Lessons in the Curriculum

If you're following our 70-Lesson Curriculum, this article expands on the core concepts found in the early stages of your journey:

- Lesson 5: Basic Logic Gates (AND, OR, NOT)

- Lesson 12: Boolean Algebra Postulates

- Lesson 15: De Morgan's Theorem in Practice

- Lesson 21: Introduction to Karnaugh Maps (The visual way to do Boolean algebra)

Your Turn: Put Knowledge into Practice

Boolean algebra is the language that bridges the gap between an abstract idea and a functioning piece of silicon. The laws are not mere suggestions; they are the mathematical tools you use to sculpt logic, eliminate waste, and create designs that are as efficient as they are correct.

The best way to internalize these concepts is to use them. Here is a challenge for you: Take the expression $Y = A \cdot B \cdot C + A \cdot B \cdot \overline{C} + A \cdot \overline{B} \cdot C$.

- Use the Distributive and Complement laws to simplify it. (Hint: It should simplify down to $Y = A \cdot (B + C)$).

- Open digisim.io and build both versions.

- Use an OSCILLOSCOPE_8CH to compare the timing.
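Once you have worked through the algebra by hand, a quick exhaustive check can confirm that your simplified expression really matches the original. Here is a minimal Python sketch; `original` and `simplified` are names chosen for this example, and the simplified form is the one given in the hint.

```python
from itertools import product

def original(a, b, c):
    # A.B.C + A.B.NOT C + A.NOT B.C
    return (a & b & c) | (a & b & (1 - c)) | (a & (1 - b) & c)

def simplified(a, b, c):
    # A.(B + C)
    return a & (b | c)

# Collect every input combination where the two expressions disagree.
mismatches = [(a, b, c) for a, b, c in product((0, 1), repeat=3)
              if original(a, b, c) != simplified(a, b, c)]

print(mismatches)  # [] means the two expressions are equivalent
```

If you arrive at a different simplification, swap it into `simplified` and rerun the check.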

Ready to see this in action? Head over to the simulator, build your circuits, and prove to yourself that the power of Boolean algebra is real, tangible, and essential for any serious engineer.